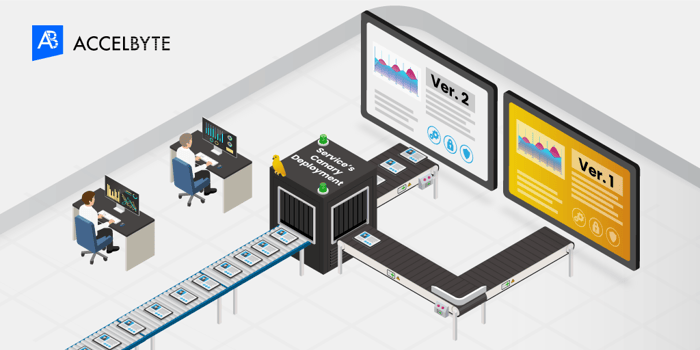

When we introduce a new release to the production environment, there are a few things to watch out for. Before deploying a new version of our software, we need to consider how it affects the availability of the whole system and how it affects our end users. We can reduce the risk of unwanted effects with a technique called canary deployment: a new release is first exposed to a small subset of users and then progressively to everyone. By monitoring the canary version and analyzing its metrics and logs, we can tell whether the new version has a negative impact on our end users before it reaches all of them.

So how do you do a canary deployment? If you are using Kubernetes, you probably want to build your own implementation on top of Kubernetes features. We tried that, without success. What held us back from managing canary deployments with Kubernetes alone is that it offers little control over how much traffic a service receives. To the best of our knowledge, the only way in Kubernetes to control the share of traffic that a particular version of a service receives is to adjust the replica ratio between versions.
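To make the replica-ratio limitation concrete, here is a minimal Python sketch. It assumes a Service spreads requests roughly evenly over all ready pods, which is a simplification; the function names are hypothetical and only serve the illustration.

```python
from fractions import Fraction
from math import ceil


def canary_share(stable_replicas: int, canary_replicas: int) -> Fraction:
    """Approximate fraction of requests that reach canary pods.

    Assumption: requests are spread evenly over all ready pods.
    """
    total = stable_replicas + canary_replicas
    return Fraction(canary_replicas, total)


def min_total_replicas(target_share: Fraction) -> int:
    """Smallest total pod count at which one canary pod gets at most target_share."""
    return ceil(1 / target_share)


print(canary_share(4, 1))                    # 1/5, i.e. 20% to the canary
print(min_total_replicas(Fraction(1, 100)))  # 100 pods for a 1% canary
```

Under this assumption, sending only 1% of traffic to a canary means running about 100 pods in total, so the traffic split is tied to capacity rather than being a routing decision.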

With this Kubernetes limitation in mind, we looked for another way to implement canary deployment. During our search, one of our team members was tasked with implementing a service mesh on our cluster to improve observability across our growing number of microservices. That is how we found Istio. Istio is a completely open source service mesh that layers transparently onto existing distributed applications. Istio provides many useful features; we currently use its rich routing rules to implement canary deployment. With Istio, we implement canary deployment by creating two Kubernetes deployments for each service. In practice, for a service named Review, we create Kubernetes deployments named Review and Review-canary. Besides creating two deployments, we also label each deployment with its version: the label version: stable goes on Review’s deployment and the label version: canary goes on Review-canary’s deployment. Then we create the Istio VirtualService and DestinationRule to control the traffic to the Review service:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: review-virtualservice
spec:
  hosts:
  - review
  http:
  - route:
    - destination:
        host: review
        subset: stable
      weight: 80
    - destination:
        host: review
        subset: canary
      weight: 20
```

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: review-destinationrule
spec:
  host: review
  subsets:
  - name: stable
    labels:
      version: stable
  - name: canary
    labels:
      version: canary
```

In the DestinationRule, the subsets field defines which deployment belongs to which version: each subset has a name and the labels (here, version) that select its pods. With the DestinationRule in place, the VirtualService sets the routing itself: the weight field of each destination is the percentage of traffic routed to the stable or canary subset.
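The effect of the weight field can be illustrated with a small Python simulation. This is only a sketch of weighted routing, not how Istio's proxies are implemented; the 80/20 weights come from the VirtualService above, while the function names and the fixed random seed are assumptions made for the example.

```python
import random
from collections import Counter

# Weights copied from the VirtualService example: 80% stable, 20% canary.
ROUTES = {"stable": 80, "canary": 20}


def pick_subset(rng: random.Random, routes: dict[str, int]) -> str:
    """Pick a destination subset with probability proportional to its weight."""
    # This sketch requires percentages that total 100, as in the example.
    if sum(routes.values()) != 100:
        raise ValueError("route weights must add up to 100")
    names = list(routes)
    return rng.choices(names, weights=[routes[n] for n in names], k=1)[0]


def simulate(requests: int, seed: int = 0) -> Counter:
    """Route `requests` simulated calls and count hits per subset."""
    rng = random.Random(seed)
    return Counter(pick_subset(rng, ROUTES) for _ in range(requests))


counts = simulate(10_000)
print(counts)  # roughly 8000 stable and 2000 canary
```

Unlike the replica ratio, the split here is independent of how many pods back each subset: changing the weights changes the traffic share without scaling anything.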

That isn't the end of it, though. Even with Istio’s rich routing rules, we still have to manage the lifecycle of canary deployments by hand: deploying the canary apps, then deleting the old versions once all traffic has been routed to the canary versions. That is bearable for a small number of microservices. But what if you have a lot of them? You would spend a massive effort on the canary lifecycle alone, and doing the tasks manually introduces a new risk of human error into your release process. For this reason, we decided to use Flagger. Flagger is an open source Kubernetes operator that aims to solve this complexity. It automates canary deployment, using Istio for traffic shifting and Prometheus for canary analysis during the rollout. To use Flagger, we simply deploy it to our cluster and create a Canary custom resource for each service. Here is an example of a Canary custom resource for the Review service:

```yaml
apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: review
  namespace: test
spec:
  # deployment that Flagger watches for changes
  targetRef:
    apiVersion: extensions/v1beta1
    kind: Deployment
    name: review
  progressDeadlineSeconds: 60
  autoscalerRef:
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    name: review
  service:
    port: 80
    targetPort: 8080
    gateways:
    - public-gateway
    hosts:
    - review.example.com
    match:
    - uri:
        prefix: /review/
  skipAnalysis: false
  canaryAnalysis:
    # how often the analysis advances
    interval: 1m
    # number of failed checks before rollback
    threshold: 5
    # maximum percentage of traffic routed to the canary
    maxWeight: 50
    # percentage added to the canary at each step
    stepWeight: 25
    metrics:
    - name: request-success-rate
      threshold: 99
      interval: 30s
    - name: request-duration
      threshold: 500
      interval: 30s
    webhooks:
    - name: load-test
      url: http://flagger-loadtester.test/
      timeout: 5s
      metadata:
        cmd: "hey -z 1m -q 100 -c 8 http://review.test:80/review/"
```

With the above configuration, the canary analysis runs for at least 2 minutes, validating the metrics, each measured over a 30-second window, at every 1-minute interval. The minimum time the analysis takes is given by this formula: interval * (maxWeight / stepWeight), which here is 1m * (50 / 25) = 2 minutes. After we apply this Canary object, Flagger generates all the objects related to the Review service that we need to run automated canary deployment:
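Before looking at those objects, the duration formula can be checked with a short Python sketch. It uses a 1-minute interval, a maxWeight of 50 and a stepWeight of 25, the values consistent with the 2-minute figure stated above; the function names are hypothetical, and the sketch models only the arithmetic, not Flagger's controller.

```python
def weight_steps(step_weight: int, max_weight: int) -> list[int]:
    """Canary traffic weights visited before promotion, in order."""
    if step_weight <= 0 or max_weight < step_weight:
        raise ValueError("expected 0 < step_weight <= max_weight")
    return list(range(step_weight, max_weight + 1, step_weight))


def min_analysis_seconds(interval_s: int, step_weight: int, max_weight: int) -> int:
    """Minimum analysis time: interval * (maxWeight / stepWeight).

    One interval is spent at each weight step, so the step count
    stands in for maxWeight / stepWeight.
    """
    return interval_s * len(weight_steps(step_weight, max_weight))


print(weight_steps(25, 50))              # [25, 50]
print(min_analysis_seconds(60, 25, 50))  # 120 seconds, i.e. 2 minutes
```

A smaller stepWeight gives a gentler ramp at the cost of a longer rollout: with a stepWeight of 5 and the same maxWeight, the same formula gives 10 minutes. Returning to the rollout itself, these are the objects Flagger generates once the Canary object is applied: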

```text
# generated
deployment.apps/review-primary
horizontalpodautoscaler.autoscaling/review-primary
service/review
service/review-canary
service/review-primary
virtualservice.networking.istio.io/review
```

As the listing above shows, in our case Flagger generates a new deployment called review-primary, which from now on acts as the primary deployment. Since all incoming traffic now goes to review-primary, what happens to the original deployment? Flagger scales the original deployment down to 0. When the Flagger controller detects a change in any of the following objects, it scales the original deployment back up to 1 to act as the canary version:

- Deployment PodSpec (container image, command, ports, env, resources, etc.)
- ConfigMaps mounted as volumes or mapped to environment variables
- Secrets mounted as volumes or mapped to environment variables

We can trigger the canary deployment by changing the image of the original deployment:

```bash
kubectl -n test set image deployment/review \
  review=dockerhub.io/review:1.8.0
```

Flagger detects the change and starts the analysis:

```text
kubectl -n test describe canary/review

Events:

New revision detected review.test
Scaling up review.test
Waiting for review.test rollout to finish: 0 of 1 updated replicas are available
Advance review.test canary weight 25
Advance review.test canary weight 50
Copying review.test template spec to review-primary.test
Waiting for review-primary.test rollout to finish: 1 of 2 updated replicas are available
Promotion completed! Scaling down review.test
```

And there we have it. That is all we need to reduce the risk of releasing a new version to the production environment. Flagger offers a lot more than what we discussed in this article, so make sure to check its official page.

If you have any questions or need help with this implementation in our services, please contact us at support@accelbyte.io.
