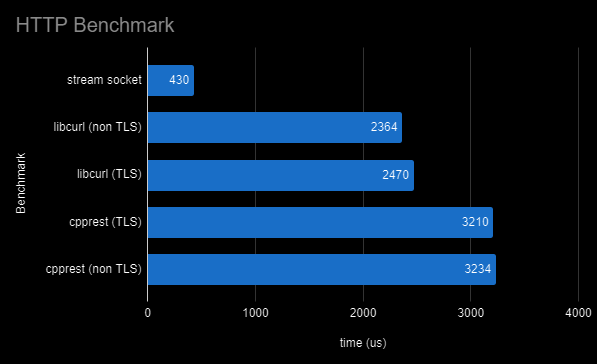

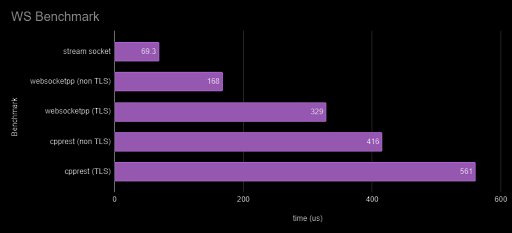

This is a story about benchmarking libraries before making them dependencies of our project. A few weeks into building our C++ SDK (the one that's going to be engine agnostic), we stumbled upon a problem: the dependency tree was huge, which made the build time uncomfortably long.

At that point we used cpprestsdk as the backend for our HTTP and WebSocket worker. Executing the export command gave us a folder about 1 GB in size, which is far too big for an SDK. We wanted it to be lean, so we searched for another library to replace cpprestsdk. To make sure the replacement was comparable, we ran some benchmarks.

We measured the total round-trip time, from send to receive, including the time spent waiting for the server to respond. We used a local server and also benchmarked a raw TCP socket to establish a baseline for the measurement, so we can be confident that the server wait time is negligible.
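To make the idea of a raw-socket baseline concrete, here is a minimal Python sketch of the measurement. It is not the benchmark code we ran (that was C++); the echo server, the `measure_round_trips` and `run_baseline` helpers, and the payload are all assumptions made for illustration.

```python
import socket
import socketserver
import threading
import time


class EchoHandler(socketserver.BaseRequestHandler):
    """Hypothetical local server: echoes every received chunk back."""

    def handle(self):
        while True:
            data = self.request.recv(4096)
            if not data:
                break
            self.request.sendall(data)


def measure_round_trips(host, port, payload, count):
    """Return `count` round-trip times in microseconds over one TCP connection."""
    samples = []
    with socket.create_connection((host, port)) as sock:
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(count):
            start = time.perf_counter()
            sock.sendall(payload)
            received = 0
            while received < len(payload):  # wait until the full echo is back
                chunk = sock.recv(4096)
                if not chunk:
                    raise ConnectionError("server closed the connection")
                received += len(chunk)
            samples.append((time.perf_counter() - start) * 1e6)
    return samples


def run_baseline(count=100):
    # Port 0 lets the OS pick a free port for this illustration.
    with socketserver.ThreadingTCPServer(("127.0.0.1", 0), EchoHandler) as server:
        server.daemon_threads = True
        threading.Thread(target=server.serve_forever, daemon=True).start()
        host, port = server.server_address
        try:
            return measure_round_trips(host, port, b"ping", count)
        finally:
            server.shutdown()


if __name__ == "__main__":
    samples = run_baseline()
    print(f"fastest round trip: {min(samples):.1f} us")
```

Whatever a library adds on top of this kind of number is its own overhead, which is why the baseline matters when comparing libraries.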

Most of the tests prepared the data once and then sent multiple requests to the server.
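The "prepare once, send many" pattern can be sketched in Python as follows. This is only an illustration under assumptions: the `OkHandler` server, the `timed_gets` helper, and the request headers are hypothetical stand-ins, not the C++ benchmark itself. The point is that the connection and request data are built outside the timed loop, so each sample covers only the request and the response. The "reinit" rows in the results further down presumably show what happens when that setup is repeated for every request.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class OkHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow keep-alive so the connection is reused

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the benchmark output quiet


def timed_gets(port, count):
    """Send `count` GET requests over one connection; return times in microseconds."""
    # Prepared once: connection, path and headers are built outside the loop.
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    path, headers = "/", {"Accept": "text/plain"}
    timings_us = []
    try:
        for _ in range(count):
            start = time.perf_counter()
            conn.request("GET", path, headers=headers)
            body = conn.getresponse().read()  # read fully so the socket can be reused
            timings_us.append((time.perf_counter() - start) * 1e6)
            if body != b"ok":
                raise RuntimeError("unexpected response body")
    finally:
        conn.close()
    return timings_us


def run_http_benchmark(count=50):
    server = ThreadingHTTPServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return timed_gets(server.server_address[1], count)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    timings = run_http_benchmark()
    print(f"{len(timings)} requests, fastest {min(timings):.0f} us")
```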

We used google-benchmark to run the benchmarks; it's a nice little library that proved useful in this case. We tested both secure and insecure connections.
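The results later in this post are reported as mean, median, and stddev rows over a number of repetitions. As a rough illustration of what those aggregates mean, here is a small Python sketch; it is not the google-benchmark API, and `run_repetitions` and `summarize` are hypothetical helpers.

```python
import statistics
import time


def run_repetitions(func, repetitions=20, iterations=1000):
    """Time `func` in `repetitions` batches; return per-call times in microseconds."""
    per_call_us = []
    for _ in range(repetitions):
        start = time.perf_counter()
        for _ in range(iterations):
            func()
        elapsed = time.perf_counter() - start
        per_call_us.append(elapsed / iterations * 1e6)
    return per_call_us


def summarize(samples):
    """Aggregate the repetitions into the three figures shown in the report."""
    return {
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "stddev": statistics.stdev(samples),  # sample standard deviation (n - 1)
    }


if __name__ == "__main__":
    samples = run_repetitions(lambda: sum(range(100)))
    for name, value in summarize(samples).items():
        print(f"{name}: {value:.3f} us")
```

A small stddev relative to the mean suggests the repetitions agree with each other, which is what makes the comparison between libraries meaningful.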

The tests were done locally using the following software:

There’s no point in doing all this if you cannot reproduce the results. To clear up any doubts, I’m going to share a guide to setting up the benchmark environment.

Preparation

Local HTTP Server

Local WS Server

Here’s a suggested configuration for the nginx.conf file. You can follow it or come up with your own.

    #user nobody;
    worker_processes 1;

    #error_log logs/error.log;
    #error_log logs/error.log notice;
    #error_log logs/error.log info;

    #pid logs/nginx.pid;

    events {
        worker_connections 1024;
    }

    http {
        include mime.types;
        default_type text/plain;

        #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
        #                '$status $body_bytes_sent "$http_referer" '
        #                '"$http_user_agent" "$http_x_forwarded_for"';

        #access_log logs/access.log main;

        sendfile on;
        #tcp_nopush on;

        #keepalive_timeout 0;
        keepalive_timeout 65;

        #gzip on;

        server {
            listen 62001;
            server_name localhost;

            location / {
                root html;
                index index.html index.htm;
            }

            #error_page 404 /404.html;

            # redirect server error pages to the static page /50x.html
            #
            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
                root html;
            }
        }

        # HTTPS server
        server {
            listen 62003 ssl;
            server_name localhost;

            ssl_certificate ../ssl/localhost.pem;
            ssl_certificate_key ../ssl/localhost-key.pem;

            ssl_session_cache shared:SSL:1m;
            ssl_session_timeout 5m;

            ssl_ciphers HIGH:!aNULL:!MD5;
            ssl_prefer_server_ciphers on;

            location / {
                root html;
                index index.html index.htm;
            }
        }
    }

And here’s the suggested SimpleHTTPSServer.py file, which listens on port 62005:

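    import ssl

    try:
        from BaseHTTPServer import HTTPServer  # Python 2
    except ImportError:
        from http.server import HTTPServer  # Python 3

    try:
        from SimpleHTTPServer import SimpleHTTPRequestHandler  # Python 2
    except ImportError:
        from http.server import SimpleHTTPRequestHandler  # Python 3

    if __name__ == "__main__":
        httpd = HTTPServer(('', 62005), SimpleHTTPRequestHandler)
        # ssl.wrap_socket() was removed in Python 3.12, so build an SSLContext
        # instead. It negotiates the TLS version rather than forcing TLS 1.0.
        context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
        context.load_cert_chain(certfile='./localhost.pem', keyfile='./localhost-key.pem')
        httpd.socket = context.wrap_socket(httpd.socket, server_side=True)
        httpd.serve_forever()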

That’s it for the environment. Now let’s see how the results turned out.

Here’s the edited output of google-benchmark for completeness.

    Run on (16 X 3194 MHz CPU s)
    CPU Caches:
      L1 Data 32K (x8)
      L1 Instruction 65K (x8)
      L2 Unified 524K (x8)
      L3 Unified 8388K (x2)

    --------------------------------------------------------------------------------------------------
    Benchmark HTTP                                                          Time        CPU Iterations
    --------------------------------------------------------------------------------------------------
    stream_socket_get_and_read_response/repeats:20_mean                   432 us     181 us         20
    stream_socket_get_and_read_response/repeats:20_median                 430 us     180 us         20
    stream_socket_get_and_read_response/repeats:20_stddev                4.89 us    25.7 us         20
    http_cpprest_get_and_read_response/repeats:10_mean                   3240 us     227 us         10
    http_cpprest_get_and_read_response/repeats:10_median                 3234 us     203 us         10
    http_cpprest_get_and_read_response/repeats:10_stddev                 21.0 us    60.4 us         10
    https_cpprest_get_and_read_response/repeats:10_mean                  3217 us     239 us         10
    https_cpprest_get_and_read_response/repeats:10_median                3210 us     227 us         10
    https_cpprest_get_and_read_response/repeats:10_stddev                17.0 us    51.6 us         10
    http_libcurl_get_and_read_response/repeats:20_mean                   2365 us     121 us         20
    http_libcurl_get_and_read_response/repeats:20_median                 2364 us     122 us         20
    http_libcurl_get_and_read_response/repeats:20_stddev                 9.04 us    14.1 us         20
    https_libcurl_get_and_read_response/repeats:20_mean                  2474 us     185 us         20
    https_libcurl_get_and_read_response/repeats:20_median                2470 us     187 us         20
    https_libcurl_get_and_read_response/repeats:20_stddev                12.4 us    18.7 us         20
    http_libcurl_reinit_get_and_read_response/repeats:20_mean          207871 us    1539 us         20
    http_libcurl_reinit_get_and_read_response/repeats:20_median        208045 us    1484 us         20
    http_libcurl_reinit_get_and_read_response/repeats:20_stddev           964 us     638 us         20

    --------------------------------------------------------------------------------------------------
    Benchmark Websocket                                                     Time        CPU Iterations
    --------------------------------------------------------------------------------------------------
    stream_socket_websocket_send_and_read_response/repeats:20_mean       69.0 us    20.4 us         20
    stream_socket_websocket_send_and_read_response/repeats:20_median     69.3 us    20.3 us         20
    stream_socket_websocket_send_and_read_response/repeats:20_stddev     1.81 us    2.73 us         20
    ws_cpprest_send_message_and_read_response/repeats:20_mean             417 us     403 us         20
    ws_cpprest_send_message_and_read_response/repeats:20_median           416 us     401 us         20
    ws_cpprest_send_message_and_read_response/repeats:20_stddev          8.91 us    15.7 us         20
    wss_cpprest_send_message_and_read_response/repeats:20_mean            563 us     543 us         20
    wss_cpprest_send_message_and_read_response/repeats:20_median          561 us     544 us         20
    wss_cpprest_send_message_and_read_response/repeats:20_stddev         6.86 us    22.4 us         20
    wspp_send_and_read_response/repeats:20_mean                           171 us     169 us         20
    wspp_send_and_read_response/repeats:20_median                         168 us     167 us         20
    wspp_send_and_read_response/repeats:20_stddev                        7.89 us    8.20 us         20
    wspp_secure_send_and_read_response/repeats:20_mean                    330 us     328 us         20
    wspp_secure_send_and_read_response/repeats:20_median                  329 us     330 us         20
    wspp_secure_send_and_read_response/repeats:20_stddev                 6.08 us    7.82 us         20
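To read the output above as relative numbers, the mean times can be turned into ratios. The short Python sketch below only does that arithmetic; the dictionary keys are shortened benchmark names chosen for illustration, and the values are the mean wall-clock times copied from the output.

```python
# Mean wall-clock times in microseconds, copied from the benchmark output above.
MEAN_US = {
    "http_cpprest": 3240,
    "https_cpprest": 3217,
    "http_libcurl": 2365,
    "https_libcurl": 2474,
    "ws_cpprest": 417,
    "wss_cpprest": 563,
    "wspp": 171,
    "wspp_secure": 330,
}

# (old library, candidate replacement) pairs for the same kind of connection.
PAIRS = [
    ("http_cpprest", "http_libcurl"),
    ("https_cpprest", "https_libcurl"),
    ("ws_cpprest", "wspp"),
    ("wss_cpprest", "wspp_secure"),
]


def speedup(old, new):
    """How many times faster `new` is than `old`, based on mean round-trip time."""
    return MEAN_US[old] / MEAN_US[new]


for old, new in PAIRS:
    print(f"{new} vs {old}: {speedup(old, new):.2f}x")
# Prints 1.37x, 1.30x, 2.44x and 1.71x respectively.
```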

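And that’s it. At the end of the day, we confidently switched to libcurl + websocketpp, since the combination proved to be both smaller and faster. In the test results above, the mean HTTP round trip is 2365 us with libcurl versus 3240 us with cpprestsdk, and the mean WebSocket round trip is 171 us with websocketpp (the wspp rows) versus 417 us with cpprestsdk.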

If you need further guidance on this topic, please reach out to us at support@accelbyte.io.
